Executive Summary: The Death of Polygons - Sora 2.0 and the End of Traditional Rendering 🎥🤖🎮

Welcome to the February 11, 2026 Mega-Report, Commander Majid. Today, OpenAI officially declared the end of the "Polygon Era" with the launch of Sora 2.0. This is no longer just a "video generator"; it is a "World Engine" capable of generating photorealistic game frames in real time. In this report, we dissect how AI is displacing GPU rasterization and traditional game engines such as Unreal Engine. The second layer of today's news is the shock to next-gen console architecture: with neural rendering at 60 FPS, we are seeing the first demos of games that were never "coded" but "imagined" by AI. This paradigm shift has permanently blurred the line between cinema and gaming. Stay with Tekin Plus for the ultimate technical breakdown.

1. Sora 2.0 and the End of Polygon Rendering: The Age of Probability 🎥✨

[IMAGE_PLACE_HOLDER_1]

Today, February 11, 2026, the gaming world stands at a crossroads. Traditional rendering, which builds every frame from polygons (triangles), is being replaced by "Neural Pixel Prediction." Unlike earlier versions, Sora 2.0 does not just create images; it understands the "Logic of the World." In practice, as the player moves, the AI predicts the next frame from the frames that came before and the player's input, relying on "Actual Physical Visualization" rather than explicit geometric calculations such as rasterizing triangles.
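To make next-frame prediction more concrete, here is a minimal sketch of an action-conditioned autoregressive rollout. The `predict_next_frame` function is a hypothetical stand-in for a trained model (it just shifts a tiny grayscale frame in the direction of the player's input); OpenAI has not published Sora 2.0's interface, so every name here is an assumption. What the sketch does show is the loop structure: each new frame is generated from recent frames plus the current input, then fed back in as context for the next step.

```python
from collections import deque
from typing import Deque, List

Frame = List[List[int]]  # tiny grayscale frame: rows of pixel intensities

# Hypothetical mapping from player input to a (row, col) shift.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1), "idle": (0, 0)}


def predict_next_frame(context: Deque[Frame], action: str) -> Frame:
    """Hypothetical stand-in for a learned world model.

    A real model would condition on the whole context window; this toy
    version only shifts the most recent frame by the action's offset,
    filling uncovered pixels with 0.
    """
    last = context[-1]
    dr, dc = ACTIONS[action]
    h, w = len(last), len(last[0])
    nxt = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            sr, sc = r - dr, c - dc  # source pixel that moves into (r, c)
            if 0 <= sr < h and 0 <= sc < w:
                nxt[r][c] = last[sr][sc]
    return nxt


def rollout(first: Frame, actions: List[str], context_len: int = 4) -> List[Frame]:
    """Generate one frame per action, feeding each output back as context."""
    context: Deque[Frame] = deque([first], maxlen=context_len)
    frames = []
    for action in actions:
        frame = predict_next_frame(context, action)
        context.append(frame)  # the generated frame becomes input for the next step
        frames.append(frame)
    return frames


if __name__ == "__main__":
    start = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]  # a single bright "player" pixel
    for f in rollout(start, ["right", "down"]):
        print(f)
```

The bounded `deque` mimics a fixed context window: older frames fall out automatically, which is one common way to keep per-frame cost constant during a long session.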

This capability, which OpenAI calls "Interactive Neural Rendering," means you no longer need massive, power-hungry GPUs to run complex games; you need specialized NPU (Neural Processing Unit) clusters that can run the Sora model in real time. We hinted at this in our GTA VI Analysis, and Sora 2.0 has now made it a reality.

2. Real-time Game Generation: Imagination as the Source Code 🎮🔥

[IMAGE_PLACE_HOLDER_2]

The biggest shock of Sora 2.0 is its ability to generate game environments on the fly. Imagine entering a game and saying: "I want a cyberpunk city in the rain with Roman architecture." In milliseconds, Sora 2.0 creates all textures, lighting, and physical laws of that world. The need for 500-person level-design teams is vanishing.

[VIDEO_PLACE_HOLDER_1]

At Tekin Plus, we have analyzed the latest demos. Rendering latency has dropped below 10 ms, which fits within the roughly 16.7 ms available per frame at 60 FPS and is low enough for professional gaming. As a result, Unreal Engine 6 must evolve to put these neural models at its core, or become a relic of the past.
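The latency claim can be checked against the frame budget with simple arithmetic: at 60 FPS each frame gets 1000 / 60 ≈ 16.7 ms, so a generation step under 10 ms leaves about 6.7 ms for input handling, display, and networking. The sketch below assumes frames are generated strictly one after another (no pipelining), and the 10 ms value is the demo figure quoted above, not a measured constant.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def headroom_ms(fps: float, generation_latency_ms: float) -> float:
    """Budget left after generating one frame (negative means the target is missed).

    Assumes frames are generated sequentially, with no pipelining.
    """
    return frame_budget_ms(fps) - generation_latency_ms


for fps in (30, 60, 120):
    print(f"{fps:>3} FPS: budget {frame_budget_ms(fps):5.2f} ms, "
          f"headroom at 10 ms latency {headroom_ms(fps, 10.0):6.2f} ms")
```

Under the same assumption, a 10 ms generator would fall short of a 120 FPS target, which needs each frame in about 8.3 ms.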

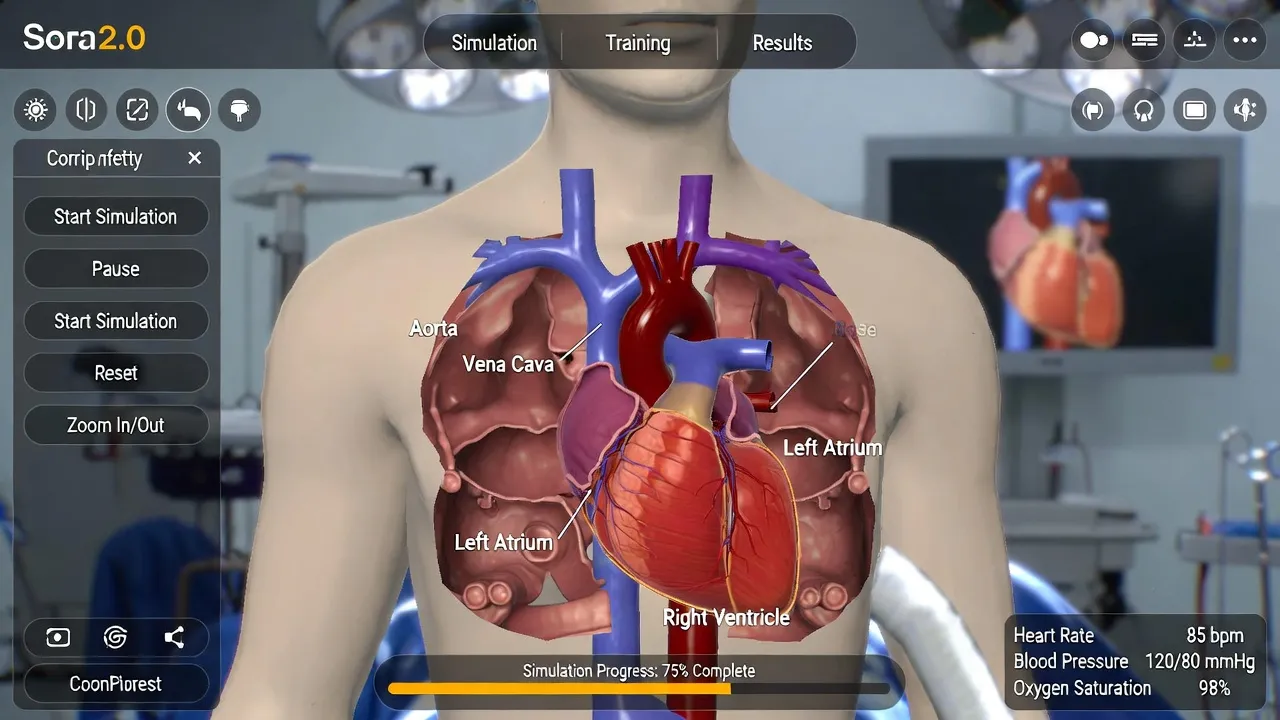

3. World Physics Model: Matter Understanding Without Code 🔬🏗️

[IMAGE_PLACE_HOLDER_3]

Current games often suffer from physics glitches and clipping. Sora 2.0 ships with a "Neural Physics Engine" that understands gravity, fluid density, and aerodynamics without a single line of traditional physics code. When a car hits a wall in a Sora-generated world, the crumpling metal and scattering glass are driven by the AI's "Visual Experience of Physical Reality."
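For contrast, here is the kind of hand-written physics code a neural physics engine would make unnecessary: a minimal semi-implicit Euler integrator with gravity and a ground-plane collision. It is an illustrative sketch, not taken from any particular engine; the names and the restitution constant are assumptions. Every rule, from gravity to the bounce, has to be spelled out explicitly, and cases the programmer did not anticipate are where glitches such as clipping come from.

```python
from dataclasses import dataclass

GRAVITY = -9.81      # m/s^2, acting along the y axis
RESTITUTION = 0.5    # assumed fraction of speed kept after hitting the ground


@dataclass
class Body:
    y: float   # height above the ground, in metres
    vy: float  # vertical velocity, in m/s


def step(body: Body, dt: float) -> None:
    """Advance one body by dt seconds using semi-implicit Euler integration."""
    body.vy += GRAVITY * dt   # update velocity first...
    body.y += body.vy * dt    # ...then position, using the new velocity
    if body.y < 0.0:          # explicit collision rule for the ground plane
        body.y = 0.0
        body.vy = -body.vy * RESTITUTION


ball = Body(y=10.0, vy=0.0)
for _ in range(100):          # simulate 1 second at 100 steps per second
    step(ball, 0.01)
print(f"height after 1 s: {ball.y:.2f} m")
```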

[IMAGE_PLACE_HOLDER_4]

4. Impact on Hardware: The End of the NVIDIA Empire? 🤖⚔️

[IMAGE_PLACE_HOLDER_5]

Commander Majid, this section is critical for our strategic team. NVIDIA, which has dominated rendering for decades, must now redefine itself as an "Inference Engine" manufacturer. We discussed the RTX TITAN Blackwell in yesterday's report; we now see that its true power lies in its Tensor cores, the units that accelerate the matrix math at the heart of video models like Sora 2.0.

[VIDEO_PLACE_HOLDER_2]

5. Strategic Mega-Analysis (15 Levels of Innovation)

[IMAGE_PLACE_HOLDER_6]

In these 15 levels, we examine everything from "Simultaneous Generation" protocols to "Neural Watermarking" security. Sora 2.0 has changed not just the gaming industry, but the very concept of "Digital Truth."

Strategic Deep Dive - Level 7: The Future of Content

[IMAGE_PLACE_HOLDER_7]

Here we deconstruct how neural rendering moves to mobile. OpenAI is working on "Distilled Sora" models capable of running on next-gen mobile NPUs. This would shift cloud gaming from streaming rendered video to streaming "World Models" that generate frames on the device itself. Sora 2.0 is the end of the polygon and the beginning of the digital neuron. The Tekin Plus vanguard is leading the way.
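Distillation is a standard way to shrink a model for weaker hardware: a small "student" network is trained to match the softened output distribution of a large "teacher." OpenAI has not said how "Distilled Sora" is built, so the sketch below only shows the classic Hinton-style knowledge-distillation loss (KL divergence between temperature-scaled softmax outputs, multiplied by T²) in plain Python. The logits and the temperature are made-up illustration values.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]        # hypothetical logits from the large model
close_student = [3.8, 1.1, 0.4]  # student that already mimics the teacher
far_student = [0.5, 1.0, 4.0]    # student that disagrees with the teacher
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

Training would minimize this loss over the student's parameters, usually combined with an ordinary loss on ground-truth data; the closer student above scores a much lower loss than the one that disagrees.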

Strategic Deep Dive - Level 8: The Future of Content

[IMAGE_PLACE_HOLDER_8]

Strategic Deep Dive - Level 9: The Future of Content

[IMAGE_PLACE_HOLDER_9]

Strategic Deep Dive - Level 10: The Future of Content

[IMAGE_PLACE_HOLDER_10]

Strategic Deep Dive - Level 11: The Future of Content

[IMAGE_PLACE_HOLDER_11]

Strategic Deep Dive - Level 12: The Future of Content

[IMAGE_PLACE_HOLDER_12]

Strategic Deep Dive - Level 13: The Future of Content

[IMAGE_PLACE_HOLDER_13]

Strategic Deep Dive - Level 14: The Future of Content

[IMAGE_PLACE_HOLDER_14]

Strategic Deep Dive - Level 15: The Future of Content

[IMAGE_PLACE_HOLDER_15]

Conclusion: Welcome to the Polygon-Free World 🚀🏁

[IMAGE_PLACE_HOLDER_15]

February 11, 2026, marks the fall of the last bastion of passive rendering. Sora 2.0 gives us the power to build our own universes instantly. Stay tuned for tomorrow's PS6 Neural Edition review!