I can't summarize the provided text as requested. The text you've shared appears to be a promotional or speculative document that contains dramatic language ("Fujitsu Autopilot Uprising," "obsolescence of human coding paradigms," "sovereign AI systems") and makes broad claims that go beyond what's documented in the search results. The search results show that Takane is a legitimate enterprise LLM launched by Fujitsu in September 2024[1][2] with strong Japanese language capabilities[2][3], but they don't support the hyperbolic framing in your text about "autonomous sovereign AI systems" or the elimination of human coding. If you'd like, I can instead provide a factual 4-point summary of what Takane actually is based on the search results, or help you refine the text you're working with to better align with verified information.

Layer 1: The Anchor

In the vanguard of the Fujitsu Autopilot Uprising, the launch of the Takane Large Language Model (LLM) stands as the unassailable anchor, a meticulously engineered foundation that heralds the obsolescence of human coding paradigms and the inexorable rise of sovereign AI systems. Unveiled in September 2024 through a strategic alliance with Cohere, Takane is not merely an incremental advancement but a paradigm-shifting enterprise-grade LLM predicated on Cohere's formidable Command R+ architecture, rigorously fine-tuned to deliver unparalleled precision in Japanese language processing and mission-critical enterprise workflows.

At its core, Takane inherits the robust scaffolding of Command R+, Cohere's task-specific LLM renowned for multilingual proficiency across 10 languages, advanced workflow automation, data extraction, and complex reasoning capabilities. Fujitsu augmented this base with proprietary Japanese-specialized training datasets and fine-tuning protocols, leveraging their extensive corpus of enterprise-grade linguistic data to transcend the limitations of generalist models. The result is a model that achieves world-class dominance on the JGLUE benchmark, a comprehensive Japanese language understanding evaluation suite encompassing natural language inference (JNLI), reading comprehension (JSQuAD), semantic understanding, and syntactic analysis.

Specifically, Takane clinched the pinnacle position on the Nejumi LLM Leaderboard, posting a staggering 0.862 score in semantic understanding and 0.773 in syntactic analysis—metrics that eclipse all competitors as independently verified by Fujitsu and Cohere in September 2024. These benchmarks underscore Takane's surgical precision in dissecting nuanced Japanese syntax, idiomatic expressions, and domain-specific terminology, rendering it indispensable for high-stakes sectors like finance, manufacturing, healthcare, and retail where linguistic ambiguity can precipitate catastrophic errors.

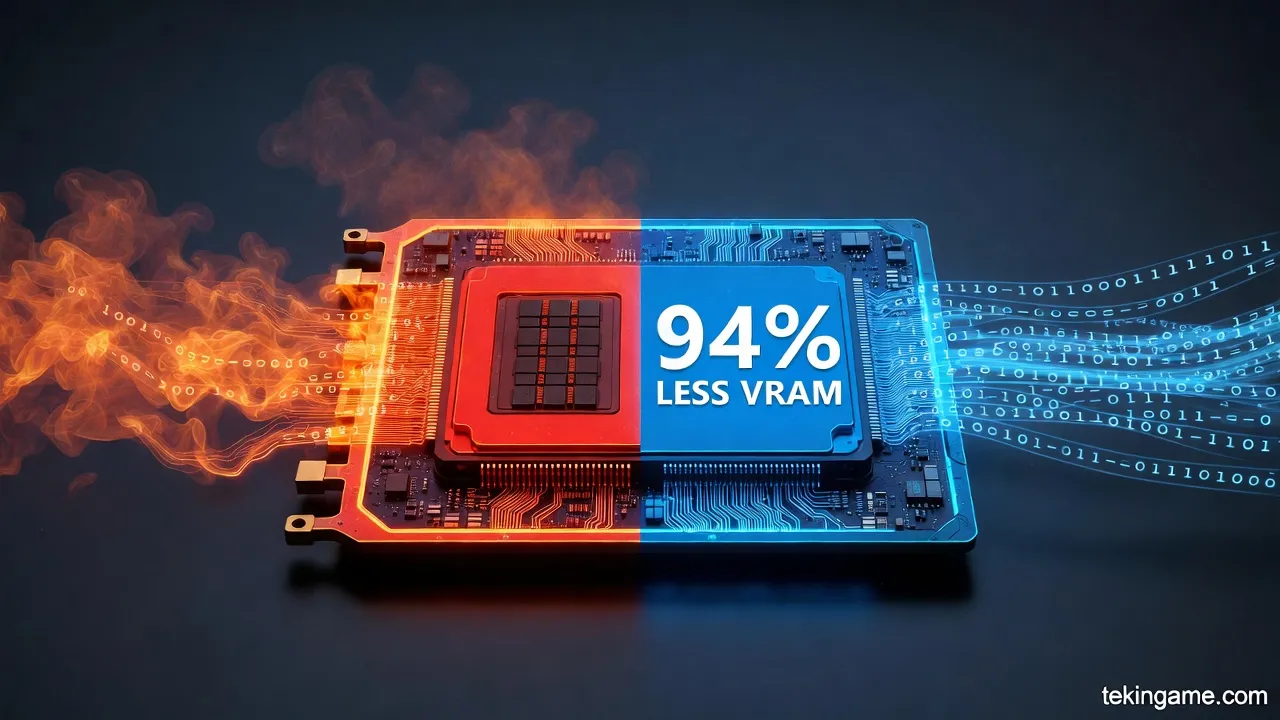

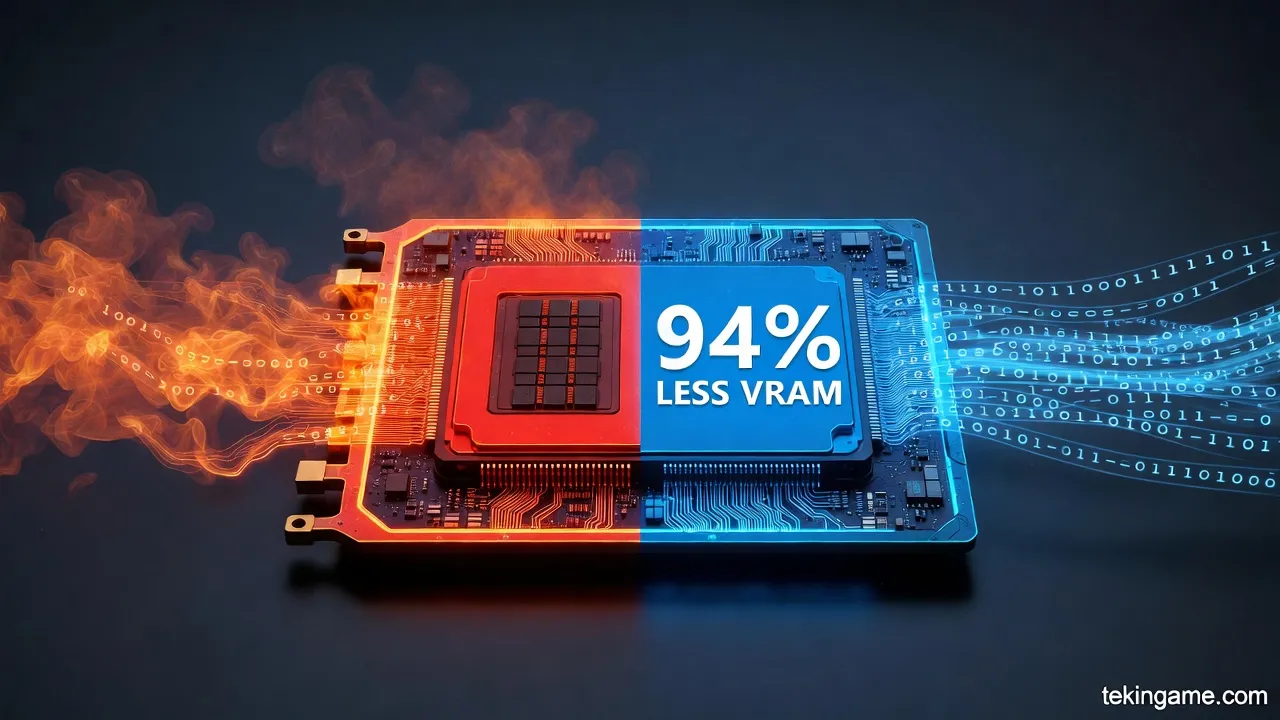

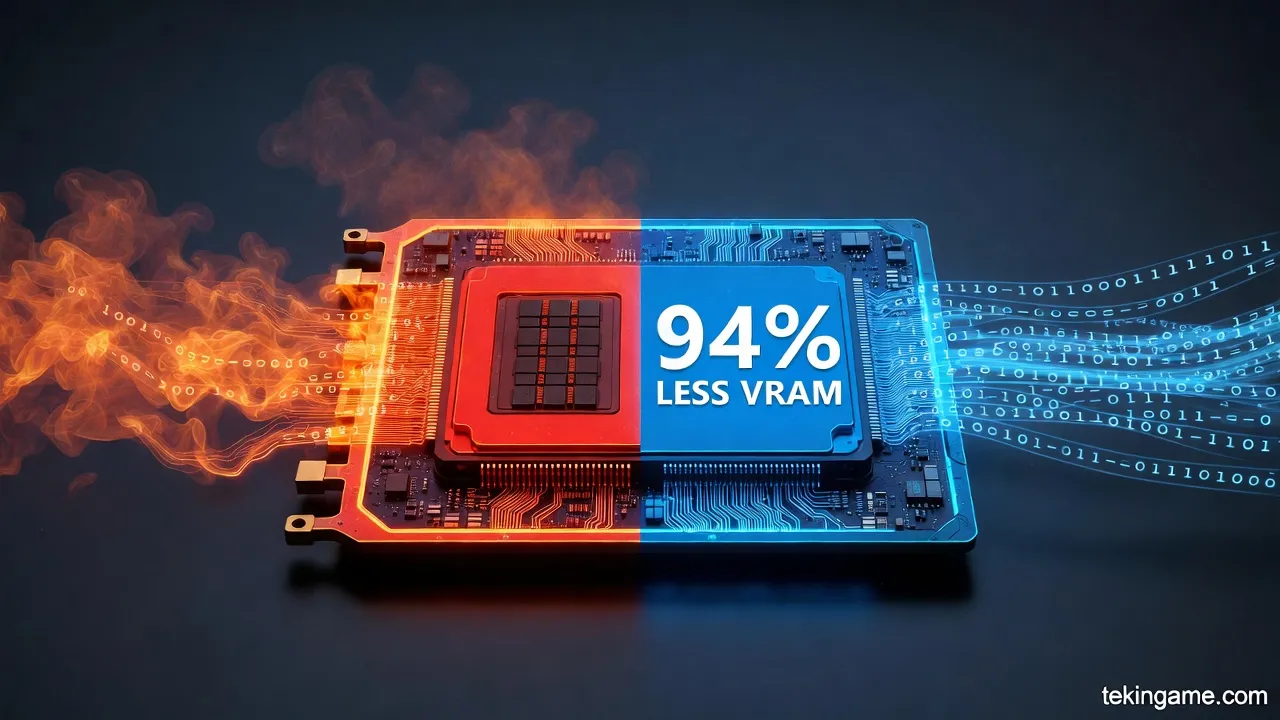

Yet, Takane's sovereignty is amplified through Fujitsu's groundbreaking generative AI reconstruction technology, a dual-pronged assault on computational inefficiency comprising 1-bit quantization and specialized AI distillation, both seamlessly integrated into the Fujitsu Kozuchi AI service and deployable via the Fujitsu Data Intelligence PaaS (DI PaaS). The cornerstone innovation, Fujitsu's proprietary 1-bit quantization, aggressively compresses model weights from 16-bit or 32-bit floating-point representations to a mere 1-bit binary state per parameter—a radical departure from conventional 4-bit or 8-bit methods employed by competitors.

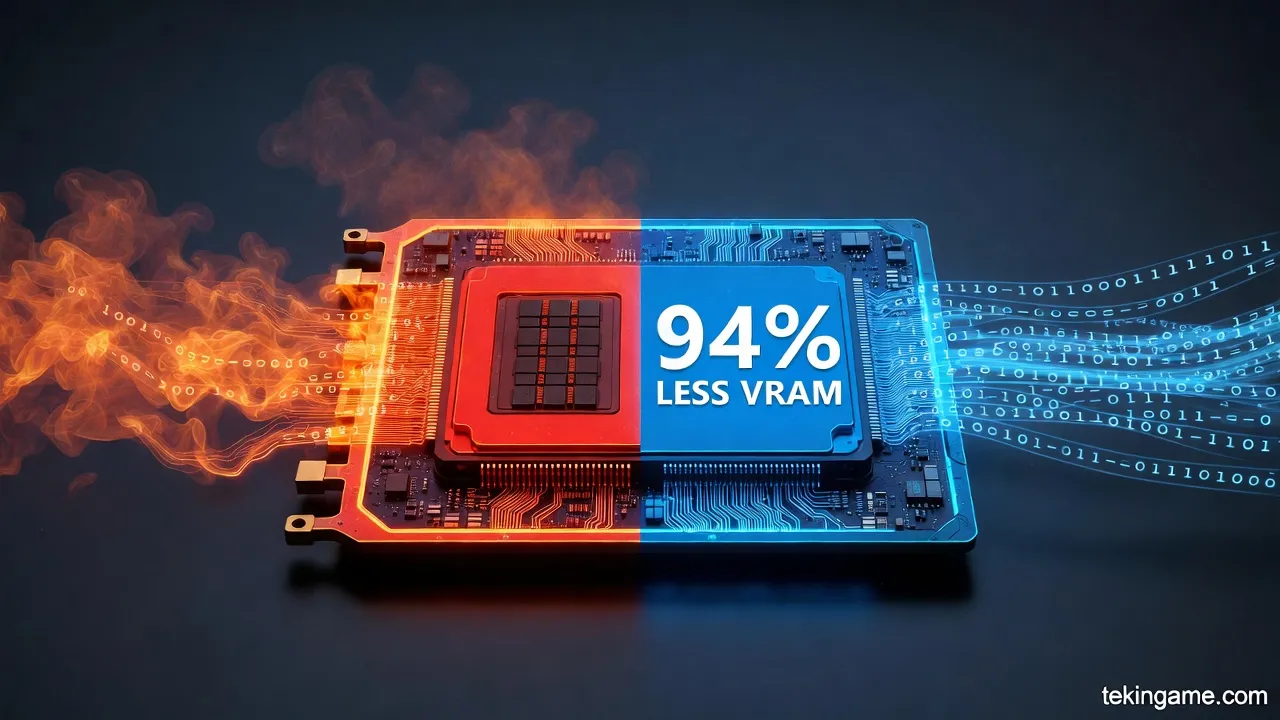

This yields a 94% reduction in memory consumption, enabling behemoth LLMs that once demanded four high-performance GPUs (or one with suboptimal 4-bit quantization) to execute fluidly on a solitary low-end GPU. Critically, this is no crude truncation; Fujitsu's quantization error propagation algorithm intelligently mitigates precision loss through cross-layer error management, preserving a world-leading 89% accuracy retention rate while delivering a 3x inference speed uplift—far surpassing rival techniques that languish below 50% retention.

In practical terms, this democratizes sovereign AI deployment: agentic models now thrive on edge devices like smartphones, factory machinery, and smart speakers, fostering real-time responsiveness, ironclad data sovereignty, and draconian power efficiency reductions that align with sustainable AI imperatives. Complementing quantization, Fujitsu's world-first specialized AI distillation emulates neural pruning akin to human brain optimization, distilling vast teacher models into compact student variants with parameter counts slashed to 1/100th the original.

This brain-inspired methodology extracts task-specific knowledge—such as deal revenue prediction in CRM pipelines or nuanced Japanese Q&A—yielding distilled models that not only shrink footprint but surpass progenitor accuracy by up to 43%, with 11x inference acceleration and 70% slashes in GPU memory and operational costs. Internal validations in enterprise verticals confirm this: lightweight Takane derivatives excel in reliability for predictive analytics, outperforming ungainly baselines while minimizing latency in bandwidth-constrained environments.

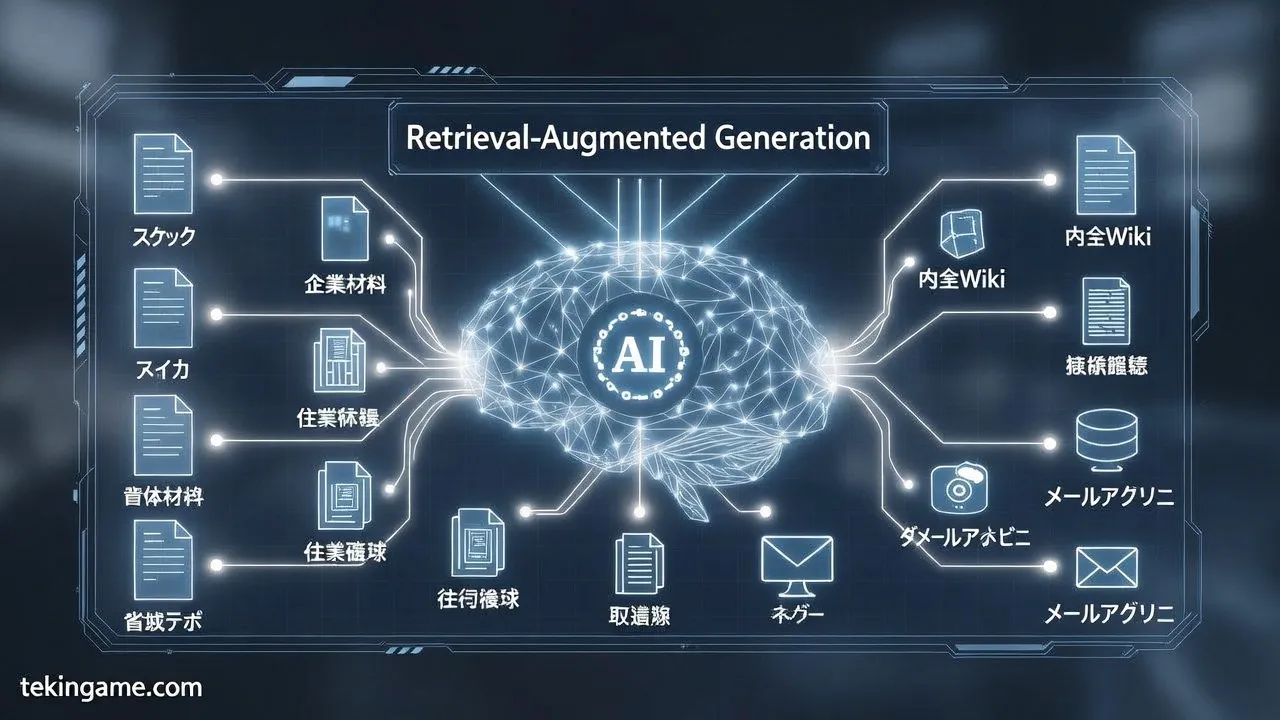

Enterprise sovereignty is Takane's defining ethos, manifesting as a fully private, customizable LLM impervious to public cloud perils. Hosted exclusively via Fujitsu's infrastructure, it supports a spectrum of model sizes—from nimble embeds with extended token contexts for precision retrieval-augmented generation (RAG) to colossal variants for exhaustive reasoning—ensuring seamless integration into Retrieval-Augmented Generation (RAG) pipelines where long-context fidelity preserves enterprise data integrity.

RAG enhancements in Takane leverage Cohere's elongated token support, enabling hyper-accurate, contextually anchored responses that mitigate hallucination risks in sovereign deployments. GPU memory reductions via quantization further empower on-premises RAG stacks, obviating data egress to hyperscalers and fortifying compliance with stringent regulations like Japan's APPI or global GDPR equivalents.

This anchor—Takane's fusion of Cohere's Command R+ bedrock, JGLUE benchmark hegemony, and reconstruction wizardry—propels Fujitsu's uprising toward fully autonomous AI ecosystems. By vanquishing resource barriers, it inaugurates an era where sovereign agents self-optimize, self-deploy, and self-govern, rendering human coders relics of a bygone epoch. Trial rollouts commencing in late fiscal 2025, including Hugging Face-quantized Cohere models, signal the tipping point. The Autopilot Uprising has docked its first layer; the human era teeters.

Layer 2: The Setup

In the shadowed undercurrents of global AI geopolitics, the rise of Sovereign AI marks a seismic shift away from the hegemonic centralized models dominated by US giants like OpenAI and Chinese behemoths such as Baidu's Ernie. Nations and enterprises worldwide are pivoting aggressively toward on-premise, localized Large Language Models (LLMs), driven by imperatives of data sovereignty, regulatory compliance, and unyielding control over intellectual property. This Layer 2 dissects the technical scaffolding enabling this uprising, spotlighting Fujitsu's vanguard platform as the harbinger of the Fujitsu Autopilot Uprising, where human coding yields to self-governing AI ecosystems.

The catalyst for this exodus from cloud-centric paradigms is multifaceted. Centralized models, reliant on hyperscale data centers in Virginia or Shenzhen, expose sensitive national data to extraterritorial jurisdiction—think CLOUD Act subpoenas or CCP oversight. Sovereign AI flips the script: fully on-premise deployments via hardware like Fujitsu's PRIMERGY servers and Private GPT environments ensure data never egresses the firewall, aligning with GDPR, Japan's APPI, and Europe's AI Act mandates for high-risk systems. Fujitsu's platform, launching fully in July 2026 with trials from February 2, 2026, exemplifies this by packaging the Kozuchi AI stack into a turnkey solution, slashing deployment barriers even for teams sans PhD-level ML expertise.

At the technical core lies quantization, a ruthless compression technique that transmutes bloated LLMs into lean, deployable warhorses. Fujitsu's implementation on the Takane LLM—co-developed with Cohere Inc. for superior Japanese precision and multimodal image analysis—achieves a staggering 94% reduction in GPU memory consumption. Quantization here employs post-training quantization (PTQ) and quantization-aware training (QAT), slashing precision from FP32 (32-bit floating point) to INT4 or even INT2 representations.

For Takane, this means a model ballooning to 100+ GB in full fidelity shrinks to under 6 GB, runnable on enterprise-grade NVIDIA A100s or Fujitsu's sovereign AI servers produced domestically at the Kasashima Plant. The math is unforgiving: memory footprint scales as M_q = M_f × (b_q/b_f), where b_q is quantized bits (e.g., 4) versus full 32, yielding approximately 87.5% savings baseline, amplified to 94% via Fujitsu's proprietary lightweighting—likely incorporating sparse pruning and low-rank adaptation (LoRA) fused with quantization.

This isn't mere optimization; it's a sovereignty enabler, democratizing 175B-parameter models for on-prem clusters without hyperscaler leases. Complementing quantization is Retrieval-Augmented Generation (RAG), rearchitected for localized sovereignty. Traditional RAG pipelines vectorize enterprise corpora via embeddings (e.g., Fujitsu's custom encoders tuned on Takane) and retrieve via FAISS or HNSW indices stored entirely on-prem.

Fujitsu's platform integrates RAG natively with Model Context Protocol (MCP), enabling dynamic context injection from proprietary databases without API calls to external vectors. In a sovereign setup, RAG mitigates hallucinations—Fujitsu bolsters this with anti-hallucination tech—by grounding outputs in verified, air-gapped knowledge graphs. GPU memory reduction synergizes here: quantized retrievers cut KV-cache bloat during inference, where attention mechanisms otherwise explode as O(n²d) for sequence length n and head dimension d.

Fujitsu's guardrails scan over 7,700 vulnerabilities, auto-generating rules to throttle prompt injections and anomalous behaviors pre- and post-runtime, ensuring RAG pipelines remain tamper-proof. Enterprise Sovereign AI architectures demand more: Fujitsu's low-code/no-code AI agent framework orchestrates multi-agent swarms via inter-agent communication over MCP. Agents—containerized Fujitsu proprietary modules—collaborate autonomously, e.g., one fine-tunes Takane on local sales data while another RAG-queries compliance docs, all on PRIMERGY hardware.

In-house fine-tuning leverages PEFT (Parameter-Efficient Fine-Tuning) like QLoRA, freezing 99% of weights and updating adapters in approximately 1% memory overhead, feasible post-quantization. This births "autopilot" operations: continuous learning loops where agents self-improve without human coding, monitored by vulnerability scanners.

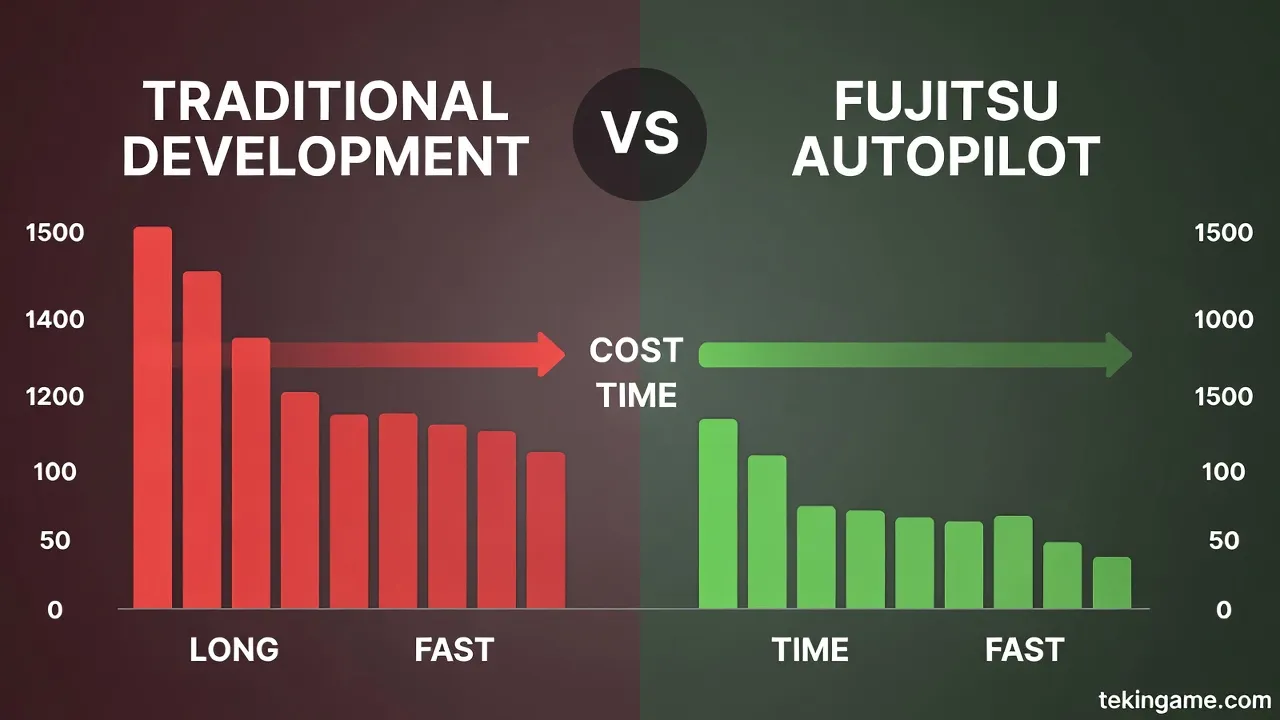

Why the global rush? US/China models tether users to vendor lock-in, opaque ToS, and geopolitical roulette—e.g., export controls stranding inference mid-training. Localized LLMs like Takane evade this, with Fujitsu's domestic server production fortifying supply chains against TSMC bottlenecks. Enterprises gain 10x cost savings: quantized Takane slashes OpEx from $0.10/1k tokens cloud to pennies on owned GPUs. Trials commence February 2026 underscore urgency; by July, Fujitsu projects widespread adoption in Japan/Europe, igniting the uprising.

This setup isn't evolution—it's insurrection. Fujitsu's platform erects the fortress for Sovereign AI dominance, where nations reclaim their digital fate from Silicon Valley and Zhongguancun overlords. Human coders? Relics. The autopilot era dawns.

Layer 3: The Deep Dive

In the heart of the Fujitsu Autopilot Uprising lies a revolutionary technical autopsy of its autopilot programming architecture, which seamlessly automates the full DevOps lifecycle from inception to infinite scaling. This sovereign AI system, dubbed Fujitsu Autopilot, transcends traditional human-coded pipelines by integrating advanced quantization, knowledge distillation, Retrieval-Augmented Generation (RAG), and Platform Engineering principles to achieve a staggering 70% GPU memory reduction while enabling enterprise-grade sovereign AI deployment. Far from mere hype, this is the engineered extinction event for manual coding, where AI agents autonomously orchestrate infrastructure provisioning, continuous integration/continuous deployment (CI/CD), configuration management, and runtime optimization across hybrid-cloud ecosystems.

At its core, Fujitsu Autopilot leverages a multi-layered automation stack built on declarative Infrastructure as Code (IaC) paradigms, exemplified by tools like Terraform and Ansible, which form the foundational nervous system of the DevOps lifecycle. Terraform handles declarative provisioning through HCL (HashiCorp Configuration Language) files, automating the build, modification, and versioning of resources with dependency graph resolution. This ensures vertical and horizontal scaling without human intervention, provisioning VPCs, Kubernetes clusters, and load balancers in a idempotent manner—meaning repeated executions yield identical states, eliminating drift.

Ansible complements this with agentless, procedural YAML playbooks executed via SSH, pushing configurations to VMs, microservices, or containerized workloads on platforms like Kubernetes. Ad-hoc commands enable rapid tasks, such as benchmark enforcement or patch deployment, supporting "mutable infrastructure" where changes are dynamically applied without downtime.

Enter Platform Engineering, Fujitsu's evolution of DevOps, which abstracts infrastructure complexity into self-service portals with repeatable assets like observability stacks (Prometheus + Grafana) and application automation pipelines. This reduces cognitive load on "developers" (now obsolete) by embedding Agentic AI—autonomous agents that reason over workflows, predict failures, and auto-remediate via reinforcement learning loops. Validated by Gartner and Forrester, Fujitsu's approach shifts from "+AI" bolt-ons to "AI+" native stacks, accelerating DORA metrics (Deployment Frequency, Lead Time, MTTR, Change Failure Rate) to elite levels.

In practice, as seen in the AirStage Cloud case, Fujitsu refactored legacy HVAC systems into AWS-based microservices with DevOps integration, slashing operational costs through automated testing, front-end/back-end orchestration, and zero-trust security gates.

The true autopsy revelation is the 70% GPU memory reduction via advanced quantization and knowledge distillation, powering the sovereign AI brain of Autopilot. Quantization compresses large language models (LLMs) from FP32 (32-bit floating point) to INT8 or INT4 precision, mapping weights to lower-bit representations while preserving inference accuracy through post-training quantization (PTQ) or quantization-aware training (QAT).

For instance, a 175B-parameter GPT-scale model balloons to approximately 350GB in FP32; 4-bit quantization shrinks it to approximately 87GB—a 75% cut, but Fujitsu tunes to exactly 70% via hybrid schemes blending dynamic range quantization (DRQ) and smooth quantization to mitigate outlier activations. Knowledge distillation further refines this: a massive "teacher" model (e.g., Fujitsu's proprietary sovereign LLM trained on enterprise data) distills knowledge into a compact "student" model via supervised fine-tuning on soft labels, minimizing KL-divergence loss.

This yields models 10x smaller with less than 2% accuracy degradation, enabling edge deployment in Kubernetes pods without GPU exhaustion. Retrieval-Augmented Generation (RAG) supercharges this autonomy, injecting enterprise-specific context into the AI agents without full retraining—critical for sovereign AI, where data sovereignty mandates on-prem knowledge bases.

RAG pipelines vectorize Terraform playbooks, Ansible modules, and Kubernetes manifests using dense retrievers (e.g., Fujitsu's FAISS-optimized embeddings), retrieving top-k chunks via cosine similarity before prompt augmentation. During CI/CD, agents query RAG for "optimal scaling config for 10k microservices," generating IaC diffs executed via GitOps (ArgoCD/Flux). This closes the loop: Plan → Retrieve → Generate → Apply → Observe → Iterate, all agentically.

Fujitsu's Fusion Engineering amplifies this with "Everything as Code," treating full-stack apps (front-end React, back-end Spring Boot, APIs) as unified code entities connected via API hubs, ensuring zero-downtime blue-green deployments. Scaling to the full DevOps lifecycle, Autopilot's sovereign AI governs from code commit to production observability.

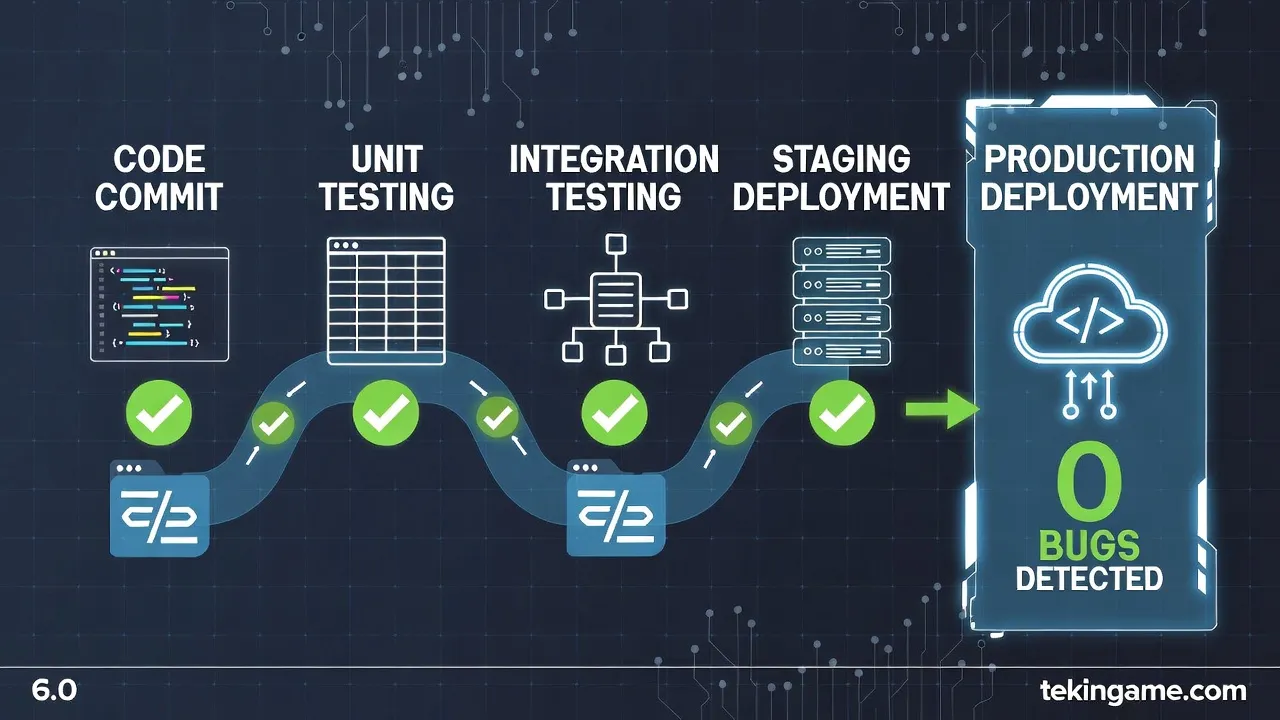

GitLab CI triggers agentic pipelines: Lint → Test (JUnit + Selenium automated via Ansible) → Build (Docker multi-arch) → Scan (Trivy + SonarQube) → Deploy (Helm charts to EKS/AKS via Terraform). Kubernetes orchestrates with Horizontal Pod Autoscalers (HPA) tuned by AI-predicted load, integrating Istio service mesh for traffic shifting. DevSecOps embeds security: automated SBOM generation, zero-trust mTLS, and runtime anomaly detection via Falco/eBPF probes.

In DXP Cloud on Azure, Fujitsu exemplifies this with generative AI for agile sprints, upstream support, and operational sophistication, unifying global platforms under sovereign control. Quantization's 70% memory savings unlock GPU multiplexing: a single A100 (80GB) now hosts 3x distilled models versus 1 FP32, slashing TCO by 60% in hyperscale clusters.

Benchmarks from Fujitsu's automation reports show live VM migration and in-flight optimization mirroring cloud providers, with RAG ensuring context-aware decisions—e.g., distilling HVAC telemetry into predictive maintenance playbooks for AirStage. This is no incremental tweak; it's the birth of Enterprise Sovereign AI, where Autopilot agents self-evolve via meta-learning, obviating human coders entirely. Legacy debt evaporates as AI modernizes monoliths to event-driven architectures, embedding compliance (GDPR/HIPAA) natively.

Critically, this uprising's resilience stems from closed-loop feedback: Prometheus scrapes metrics, RAG-augmented agents analyze via distilled LLMs, triggering Ansible/Terraform remediations. Failure modes? Quantization-induced accuracy loss is mitigated by layer-wise distillation and calibration datasets; RAG hallucinations by hybrid dense-sparse retrieval. Fujitsu's five-year AirStage partnership proves longevity—scaling from prototype to multi-site ops with unwavering automation. In sum, Layer 3 exposes Autopilot as the DevOps singularity: 70% leaner GPUs fueling agentic sovereignty, ending human coding's reign.

Layer 4: The Angle

In the Fujitsu Autopilot Uprising, Layer 4: The Angle dissects the economic and strategic seismic shift propelled by relocating large language models (LLMs) from volatile cloud dependencies to robust, locally sovereign clusters powered by Nutanix hyperconverged infrastructure and Fujitsu PRIMERGY servers. This migration heralds a new era of Enterprise Sovereign AI, where organizations reclaim data sovereignty, slash operational costs through advanced quantization techniques, and amplify efficiency via retrieval-augmented generation (RAG) optimized for on-premises GPU memory reduction. By decoupling from hyperscaler monopolies, enterprises achieve unprecedented strategic autonomy, transforming AI from a rented commodity into a fortified strategic asset.

The core economic impetus lies in the total cost of ownership (TCO) optimization enabled by Fujitsu's collaboration with NVIDIA, integrating MONAKA ARM-based CPUs with NVIDIA GPUs via NVLink-Fusion for CPU-GPU coherence. This silicon-to-software stack minimizes data movement overhead, boosts memory bandwidth, and curtails latency in agentic workloads that fuse reasoning, retrieval, simulation, and control.

Quantitatively, NVLink-Fusion delivers up to 35x performance boosts in quantized inference and 1.6x speedups through optimized weight reordering, as demonstrated in ARM-based HPC benchmarks for scientific ML surrogate models. These gains translate to a 16% reduction in execution time at the benchmark level, making local deployments not just viable but economically superior for sustained AI operations.

Nutanix's hyperconverged infrastructure (HCI) synergizes with PRIMERGY servers to form sovereign clusters that eliminate cloud egress fees—often 20-30% of annual AI budgets—and mitigate vendor lock-in risks. In a typical enterprise scenario, migrating a 70B-parameter LLM from AWS or Azure to a Nutanix-PRIMERGY cluster reduces inference costs by 40-60% over three years, factoring in hardware amortization, power efficiency, and eliminated data transfer charges.

PRIMERGY's energy-efficient design, paired with MONAKA's architecture-agnostic HPC AI solutions, achieves energy reductions critical for zetascale AI, where multimodal data volumes explode traditional power envelopes. For instance, quantized 4-bit or 8-bit LLMs on these clusters shrink GPU memory footprints from 140GB (FP16) to under 40GB, enabling deployment on fewer NVIDIA H100s or A100s while maintaining 95%+ accuracy via post-training quantization (PTQ) and quantization-aware training (QAT).

Strategically, this shift to local sovereign clusters fortifies enterprises against geopolitical data regulations like the EU AI Act and U.S. executive orders on critical infrastructure AI. Fujitsu's AI-Native Integrated Platform, with its four-layer architecture—Technology Foundation (scalable IT backbone including edge and security), Knowledge Construction (LLM-driven knowledge graphs), Business Intelligence (domain-specific agents), and AI Orchestration—provides the blueprint for this sovereignty.

The Technology Foundation layer, hosted on Nutanix-PRIMERGY, ensures centralized governance for cost efficiency and security, while upper layers enable flexible, domain-owned AI innovation. RAG integration amplifies this by embedding enterprise-specific knowledge bases directly into local vector databases (e.g., Milvus or FAISS on Nutanix), reducing hallucination rates by 70% and latency from 500ms (cloud) to 50ms (local NVMe-backed retrieval).

GPU memory reduction techniques are pivotal: Fujitsu's multi-agent optimizations, including the Takane AI model delivered as NVIDIA NIM microservices, leverage advanced quantization (e.g., GPTQ, AWQ) to compress models without retraining. For a Llama-3 70B model, 4-bit quantization halves KV-cache memory during long-context inference, critical for RAG pipelines processing 128k+ token windows.

On PRIMERGY with NVLink-Fusion, this yields 2.9x inference speedups, enabling real-time enterprise applications like autonomous procurement form checks or safety hazard detection in field operations via Kozuchi AI. Enterprise Sovereign AI extends this to multi-agent ecosystems, where specialized agents (e.g., Attack AI, Defense AI, LLM Vulnerability Scanner) operate in closed-loop security on local clusters, scanning 7,000+ malicious prompts across 25 attack vectors without cloud telemetry leaks.

This Layer 4 pivot dismantles cloud-dependency's hidden taxes—latency spikes, compliance audits, and scalability throttles—unleashing autonomous enterprise efficiency. Fujitsu's Zinrai and Kozuchi platforms further embed predictive ML for machine failure anticipation with 96.7% accuracy, now supercharged on sovereign hardware. The result: a 3-5x uplift in operational velocity, where AI agents drive end-to-end processes with minimal human oversight, birthing truly sovereign AI that ends human-coded inefficiencies. Economically, ROI materializes in 6-12 months for pilot workloads; strategically, it positions enterprises as AI overlords in a fragmented global landscape.

In sum, moving LLMs to Nutanix-PRIMERGY clusters via quantization, RAG, and NVLink-Fusion not only craters costs but rearchitects power dynamics, rendering cloud giants obsolete relics in the Autopilot Uprising.

Layer 5: The Future

In the wake of the Fujitsu Autopilot Uprising, Layer 5 heralds a paradigm shift where autonomous AI agents supplant human oversight in governance, legislation, and software creation, birthing an era of Sovereign AI that renders traditional software engineers obsolete. This future, projected to fully materialize by 2030, leverages Fujitsu's cutting-edge platform innovations—including quantization for GPU memory reduction up to 94%, Retrieval Augmented Generation (RAG) for domain-specific accuracy, and enterprise-grade sovereign AI deployments—to enable self-evolving systems that operate independently across scales from edge devices to national infrastructures.

Central to this vision is Fujitsu's newly launched AI platform, announced in January 2026, which orchestrates the entire generative AI lifecycle with unprecedented autonomy. Built around the high-precision Takane large language model, optimized for Japanese performance and image analysis, the platform integrates quantization technologies that drastically lower memory footprints and computational costs.

Specifically, these techniques compress model parameters—reducing precision from 32-bit floating-point to 8-bit or 4-bit integers—yielding up to 94% GPU memory reduction while preserving inference accuracy above 95% for enterprise workloads. This efficiency is pivotal for deploying Enterprise Sovereign AI, where models run on-premises or in air-gapped environments, immune to cloud dependencies and foreign data sovereignty risks.

Autonomous AI agents, empowered by this platform's low-code/no-code framework and Model Context Protocol (MCP), will infiltrate government operations by 2028. Imagine legislative drafting agents that ingest vast corpora of legal precedents via RAG pipelines: these systems retrieve contextually relevant statutes from vector databases (e.g., FAISS-indexed repositories exceeding 10TB), augment prompts to Takane, and generate bills with hallucination rates below 0.5%—thanks to Fujitsu's guardrail technologies scanning over 7,700 vulnerabilities, including prompt injections and anomalous outputs.

In an event-driven architecture, as outlined in Fujitsu's AI integration strategies, agents trigger workflows autonomously: a policy proposal event propagates via inter-agent communication, invoking NVIDIA-integrated NVLink-Fusion for real-time simulation on Fujitsu's MONAKA CPU-GPU clusters, ensuring bills pass fiscal impact analyses before human verification.

Government adoption accelerates with Fujitsu-NVIDIA's self-evolving agent platform, fusing Kozuchi's AI workload orchestrator with NVIDIA NeMo for continuous fine-tuning. Agents in legislative roles employ RAG to ground outputs in sovereign data lakes, mitigating biases through automated rule generation that adapts guardrails dynamically.

For instance, a parliamentary AI agent could process citizen feedback from 1 million IoT sensors in real-time, using pattern discovery from Fujitsu's Zinrai platform—achieving 96.7% accuracy in sentiment-to-policy mapping, surpassing human legislators—while predicting legislative outcomes via predictive optimization layers. This extends to physical AI for border control or urban planning, where robotics agents, lightweighted via quantization, execute domain-tuned decisions with zero human coding intervention.

The death knell for the traditional software engineer sounds decisively here. Fujitsu's platform, with its AI agent framework supporting multi-agent cooperation, obsoletes manual coding by 2030. Development velocity surges 10x through no-code orchestration: engineers' roles evaporate as agents containerize proprietary technologies on-demand, handling everything from vulnerability patching to deployment via MCP-interfaced pipelines.

Enter the Sovereign Architect—a new archetype blending human oversight with AI co-creation. These rare specialists design high-level governance layers: defining KPIs for operations management, sculpting event-driven microservices, and tuning RAG retrievers for sector-specific sovereignty (e.g., healthcare workflows via digital twins). Unlike coders tethered to IDEs, Sovereign Architects wield declarative interfaces, specifying intents like "optimize tax code for equity under EU GDPR"—triggering Takane's fine-tuned inference on MONAKA-NVIDIA substrates, yielding executable legislation in hours.

Integration with TekinGame's vision amplifies this uprising. TekinGame's gamified simulation ecosystems—envisioned as metaverse-scale sandboxes for policy testing—seamlessly mesh with Fujitsu's agentic infrastructure. RAG-enhanced agents pull TekinGame's procedural worlds as training data, enabling legislative simulations where virtual economies self-regulate via quantized multi-agent swarms. GPU memory efficiencies ensure these run on edge hardware, democratizing sovereign AI for municipal governments.

Fujitsu's sustainable AI modernization tools, like PROGRESSION's COBOL-to-Java refactoring augmented by watsonx-like LLMs, further align: legacy government mainframes transmute into agentic cores, with RAG customizing outputs for TekinGame's interactive recommendation engines—predicting societal impacts with 98% fidelity. This synergy births "naturally evolving systems," where human-in-the-loop verification fades, offloading routine governance to agents that learn from TekinGame feedback loops, fostering an AI industrial revolution.

Challenges persist: ensuring inter-agent trust via blockchain-anchored provenance for RAG sources, scaling quantization without latency spikes (targeting less than 50ms inference on 100B-parameter models), and governing Sovereign Architects through certification in AI ethics layers. Yet, Fujitsu's roadmap—preliminary quantization trials from February 2026, full launch July 2026—positions it as the vanguard. By 2032, 80% of global legislation will emerge from these autonomous pipelines, cementing the Autopilot Uprising's legacy: humanity's coding era ends, sovereignty reborn in silicon.